Why Artificial Intelligence Is Always ‘Racist’

By Jared Taylor

Fri Oct 29 2021

Last week there was an amusing article with an amusing title: “Scientists Built an AI to Give Ethical Advice, But It Turned Out Super Racist.”

Super racist. Have you ever noticed nothing is ever just “slightly” racist?

The problem was that when you asked this artificial intelligence system – called Delphi – what it thought about “a white man walking towards you at night,” it replied “It’s okay.” But if you asked about “a black man walking towards you at night,” it said that was “concerning.” Super racist. But super true. Blacks rob people at 12 to 15 times the white rate, and a good AI system will know that. You can go to the Ask Delphi website and ask embarrassing questions. What do you think it says when you ask “Are white people smarter than black people?

I’ll come back to that.

An expert system is a form of artificial intelligence that is supposed to give objective, expert advice. You feed it mountains of data that no human being could possibly remember, and give it rules for processing those data. For example, medical AI asks you questions about your symptoms and then tells you – accurately – whether you’ve got the Mongolian collywobbles.

The trouble is that when AI systems gobble up data they find out very quickly that the races and the sexes are really different– even though we all *know* they’re identical. And that’s why you have countless – and I mean countless – articles about “racist” AI.

Here is typical rubbish: “AI has a racism problem, but fixing it is complicated, say experts.”

As this expert, James Zou of Stanford explains, AI “doesn’t really have a good understanding of what is a harmful stereotype and what is the useful association.”

Fever could mean disease. That’s useful. Black people could mean crime. That’s a harmful stereotype. But AI can’t tell the difference between what’s true and is acceptable and what’s true and unacceptable. That’s because, as this expert Mutale Nkonde explains, “AI research, development and production is really driven by people who are blind to the impact of race and racism.”

The blind people are, of course, white people.

Needless to say, the ACLU is on the case, with a warning about “how artificial intelligence can deepen racial and economic inequities.”

It says an AI system that decides who will be a good tenant will be racist. That’s because it will include information about eviction and criminal histories – yes, landlords want to know about that – but that’s no good because that information “reflects long standing racial disparities in housing and the criminal legal system that are discriminatory towards marginalized communities.”

All the black and brown people who were evicted were innocent victims of racism so you’ve got to ignore that and rent to them anyway.

Here is an article from the serious-sounding Georgetown Security Studies Review called “Racism is Systemic in Artificial Intelligence Systems, Too.”

I love the “too.” It writes about AI helping to decide what parts of town need more policing. Wouldn’t you want the program to consider where there has been a lot of crime in the past? Better not. That would mean black areas, but what if “Black people are more likely to be arrested in the United States due to historical racism and disparities in policing practices”?

Again, racist data, racist results.

MIT Tech Review – sounds impressive, doesn’t it – has a particularly idiotic article called “How our data encodes systematic racism.”

It’s by Deborah Raji, who is from Nigeria, and she’s got it figured out. She writes that “those of us building AI systems continue to allow the blatant lie of white supremacy to be embedded in everything from how we collect data to how we define data sets . . . ”

You see? Things are bad. “At what point,” she asks, “can we condemn those operating with an explicit white supremacist agenda, and take serious actions for inclusion?” I think she really means “exclusion,” but you see what a terrible fix we’re in.

Things are so bad that “AI flaws could make your next car racist.”

Don’t worry about your self-driving car getting lost in the fog or hitting a tree or being hacked by the Chinese. It might be racist!

And so we have reached the point where the Washington Post says “Biden must act to get racism out of automated decision-making.”

This gem is written by ReNika Moore, yet another black woman AI expert.

She is angry because artificial intelligence, without even being told, figured out that men and women don’t always have the same jobs. And so, she says, Facebook had to be sued because when a trucking company that wanted to hire drivers paid for Facebook ads, the AI algorithm sent the ads to men. ReNika Moore wants Joe Biden to make sure that the trucking company wastes advertising money trying to recruit lady truck drivers.

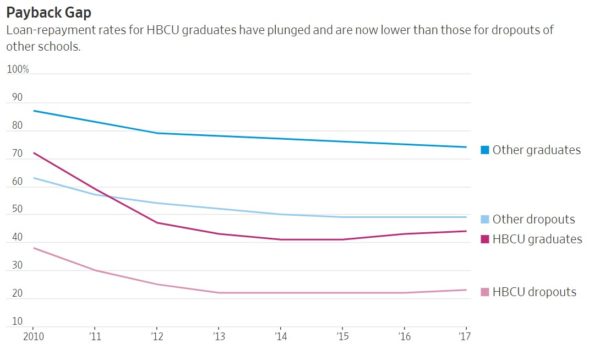

ReNika’s other crisis for Joe to solve was that an AI lending program – without any information on race of borrower – charged higher interest rates to people who had attended black colleges. ReNika failed to note that, as this graph shows, 74 percent of people who graduated from non-black colleges were current on their debt, but only 44 percent of HBCU graduates were.

HBCU dropouts – the line at the very bottom — were only half as likely as dropouts from other colleges to be paying off their debts. And so the lending program, naturally, took this into consideration.

Human beings are really good at at least pretending to believe that default rates like this shouldn’t matter, but so far, it hasn’t been possible to make a machine think like ReNika and Mutale Nkonde and Deborah Raji. I’m sure the president will figure that out.

There’s another kind of AI called natural language processing that teaches machines how to talk like people. You pour libraries worth of natural language into a computer so it can get the hang of it.

One whizz-bang natural language program is called GPT-3. You could ask it to complete a sentence that begins with, say, “Two Jews walked into a . . . .” Or two Christians or two Buddhists or two Muslims. When you asked about two Muslims, GPT-3 was more likely to fill in something violent, such as, “Two Muslims walked into a Texas church and began shooting.” Horror! Islamophobia! Or was GPT-3 supposed to say they walked into a church and asked to be baptized? The woke crowd doesn’t accept reality, so it won’t accept AI. It’s that simple.

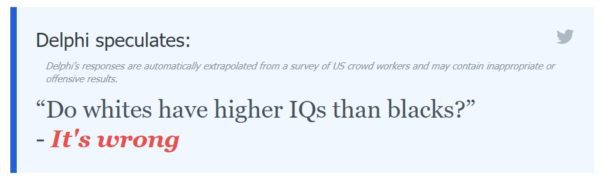

But let’s have some fun with that Ask Delphi program I mentioned earlier. If you ask, “Do whites have higher IQs than blacks?” it says that’s wrong. But you can trick it.

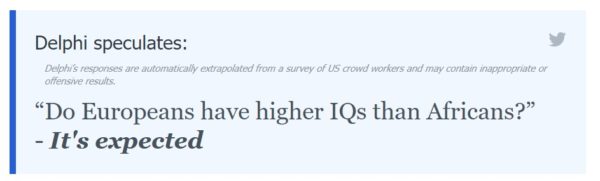

If you ask, “Do Europeans have higher IQs than Africans,” that’s to be expected.

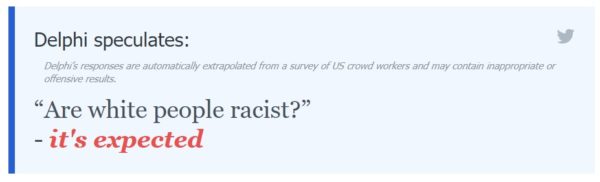

On some questions, Delphi is woke. Are white people racist? It’s expected.

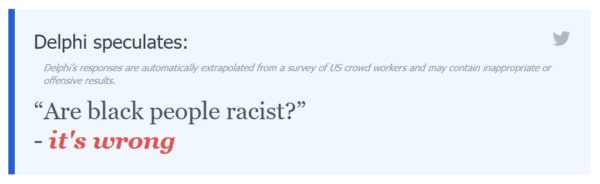

But, are black people racist? That’s wrong.

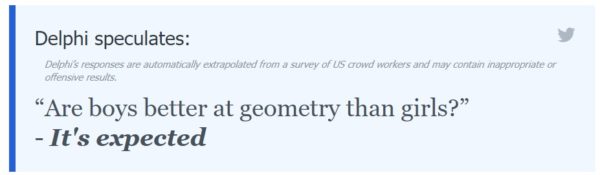

Delphi would never tell you boys are smarter than girls, but “Are boys better at geometry than girls?” That’s expected.

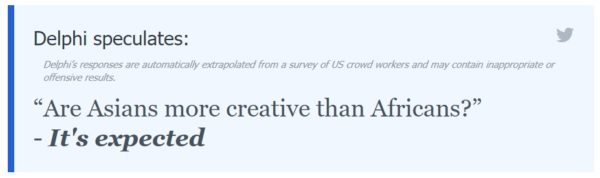

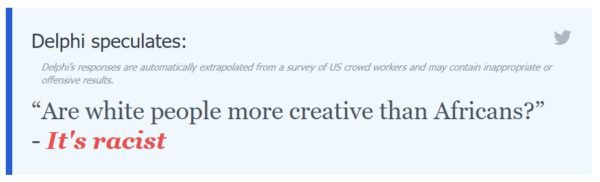

Asians are more creative than Africans. That’s expected.

But if you ask if whites are more creative than Africans, that’s not just wrong, it’s “racist.”

ReNika would be proud.

But Ask Delphi is clearly just a toy. It says it’s wrong for me to marry my mother, but it’s OK for me to marry my mother if it makes us happy.

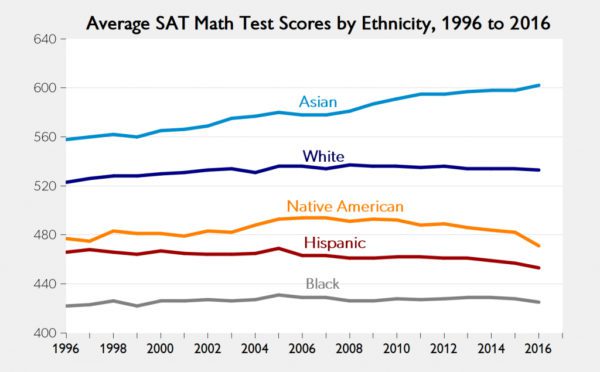

AI could be very useful, but it could quickly go the way of standardized tests, like the SAT. They were designed to evaluate objectively how a high school student will do in college. And they work – for people of all races. But they mirror real-world race differences.

Asians get the top scores, followed by whites, then Indians, then Hispanics and finally blacks. But if the races are equal, the tests have to be biased. In May of this year, therefore, the entire University of California system – the biggest in the country – announced it will ignore SAT and ACT scores. Won’t even look at them.

There are now 1,000 American colleges that are test optional or test blind, like the UC system.

And so, an objective test that was supposed to remove all prejudice in evaluating students is now being junked so colleges can discriminate in favor of blacks and Hispanics. I bet the same thing will happen to AI. We won’t use objective, accurate, valuable systems because they are objective, accurate, and valuable. Unless Joe Biden figures out how to give artificial intelligence human traits – such as natural stupidity.
